Technical SEO is the foundation every other SEO effort sits on. Without it, your content, backlinks, and keyword strategy are built on sand. This technical SEO guide breaks down the exact processes, tools, and fixes you need to optimize your website for search engines in 2026 — from Core Web Vitals and crawlability to schema markup and site audits. Whether you’re running a small business site or managing enterprise-level architecture, this is the playbook.

I’ve audited hundreds of websites over the past decade. The pattern is always the same: sites that nail the technical fundamentals outrank sites with better content but broken infrastructure. A beautifully written blog post means nothing if Googlebot can’t crawl it, your server takes 6 seconds to respond, or your mobile layout shifts every time an image loads.

This guide consolidates everything I know about technical SEO into one actionable resource. Bookmark it. Reference it. Use the checklist at the end every time you launch or audit a site.

What Is Technical SEO? (And Why It Matters)

Technical SEO is the practice of optimizing your website’s infrastructure so search engines can efficiently crawl, index, render, and rank your pages. It covers server configuration, site speed, mobile responsiveness, structured data, security, URL architecture, and everything that happens behind the content itself.

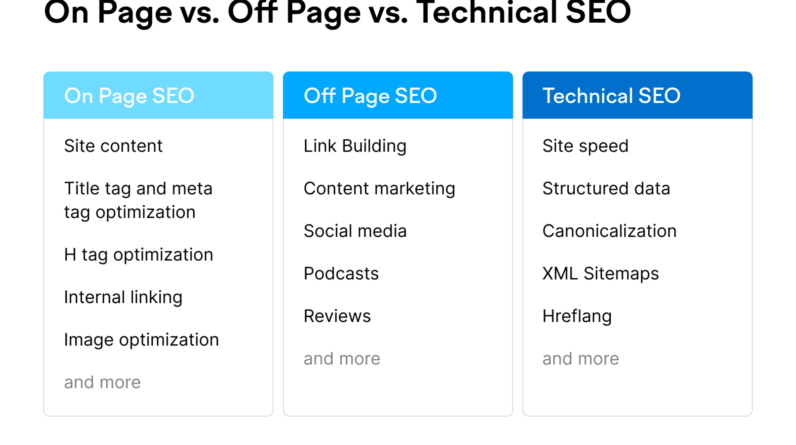

Think of it this way: if your website were a house, technical SEO is the foundation, plumbing, and electrical. On-page SEO is the interior design. Off-page SEO is your reputation in the neighborhood. You can have stunning furniture, but if the roof leaks and the wiring sparks, nobody’s moving in.

Technical SEO vs On-Page vs Off-Page

| Category | What It Covers | Examples |
|---|---|---|
| Technical SEO | Website infrastructure and crawlability | Site speed, HTTPS, robots.txt, XML sitemaps, schema markup, mobile responsiveness, Core Web Vitals |
| On-Page SEO | Content and HTML elements on individual pages | Title tags, meta descriptions, header hierarchy, keyword placement, internal linking, image alt text |
| Off-Page SEO | External signals and authority building | Backlinks, brand mentions, digital PR, social signals, guest posting |

The reality? These three categories overlap constantly. Internal linking is both on-page and technical. Schema markup affects how your content appears in SERPs. A slow server kills your content’s ability to rank regardless of how well-optimized it is. That’s why this guide touches all three — because in practice, you can’t separate them cleanly.

How Technical Issues Kill Rankings

Here’s what I see on nearly every audit I run: the site owner has invested in content, maybe even hired an SEO consultant for keyword research, but they’re hemorrhaging rankings because of fixable technical problems. Common killers include:

- Blocked crawling — A misconfigured robots.txt that disallows critical directories. I’ve seen entire blog sections invisible to Google because someone added a stray `Disallow: /blog/` during a migration.

- Slow server response — Time to First Byte (TTFB) over 1 second. Cheap shared hosting is the usual culprit.

- Broken canonical tags — Duplicate content issues that split ranking signals across multiple URLs.

- Missing HTTPS — Still? Yes. In 2026, I still find sites running on HTTP. Google has treated HTTPS as a ranking signal since 2014.

- Mobile usability failures — Text too small, tap targets overlapping, horizontal scrolling. Google’s mobile-first indexing means your mobile experience is your ranking experience.

A client came to me with a 40-page site stuck on page 3 for every target keyword. Content quality was decent. Backlink profile was reasonable. The problem? Their hosting provider’s server response time averaged 3.8 seconds, they had 47 crawl errors in Search Console, and their XML sitemap hadn’t been updated in two years. We fixed the technical issues — new hosting, fixed crawl errors, fresh sitemap, proper canonicals — and 14 of their target keywords moved to page 1 within 60 days. The content didn’t change. The infrastructure did.

Site Speed and Core Web Vitals

Page speed isn’t a vanity metric. It’s a confirmed ranking factor, and since Google’s Page Experience update, it’s measured through three specific Core Web Vitals (CWV). If your site fails these thresholds, you’re actively losing rankings to faster competitors.

LCP, INP, CLS: What They Are and How to Fix Them

| Metric | What It Measures | Good | Needs Improvement | Poor | Common Fixes |
|---|---|---|---|---|---|
| LCP (Largest Contentful Paint) | Time for the largest visible element to load | ≤2.5s | 2.5–4.0s | >4.0s | Optimize hero images, preload critical assets, upgrade hosting, implement CDN |
| INP (Interaction to Next Paint) | Responsiveness to user interactions | ≤200ms | 200–500ms | >500ms | Reduce JavaScript execution time, defer non-critical scripts, break up long tasks |
| CLS (Cumulative Layout Shift) | Visual stability: how much the layout shifts during loading | ≤0.1 | 0.1–0.25 | >0.25 | Set explicit image dimensions, use font-display: optional or a size-matched fallback font, reserve space for ads/embeds |

LCP is where most sites fail. The biggest offender? Unoptimized hero images. I worked with a client whose homepage hero was a 2.4MB PNG. After converting to WebP (187KB), adding fetchpriority="high", and preloading the image, their LCP dropped from 4.2 seconds to 1.8 seconds. Rankings for their primary keyword improved 12 positions in three weeks.

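CLS is the sneakiest problem. You might not even notice layout shifts during testing because your browser caches assets. But a first-time visitor on a 4G connection sees elements jumping around the page as images load without dimensions and web fonts swap in. Always set explicit width and height attributes on images. For custom fonts, keep in mind that font-display: swap keeps text visible but can itself shift the layout when the web font replaces the fallback; font-display: optional, or a fallback font whose metrics are matched with size-adjust, avoids that shift.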
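
The thresholds in the table above translate directly into a small classifier, which is handy when you're triaging exported field data. This is a minimal Python sketch: the names `THRESHOLDS` and `rate_metric` are illustrative and not part of any Google tool, and boundary values are assumed to fall in the better band.

```python
# Core Web Vitals thresholds from the table above:
# (upper bound of "good", upper bound of "needs improvement").
THRESHOLDS = {
    "LCP": (2.5, 4.0),    # seconds
    "INP": (200, 500),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def rate_metric(name: str, value: float) -> str:
    """Classify a measurement as 'good', 'needs improvement', or 'poor'."""
    good_max, needs_improvement_max = THRESHOLDS[name]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


# The hero-image example above: LCP before and after optimization.
print(rate_metric("LCP", 4.2))  # poor
print(rate_metric("LCP", 1.8))  # good
```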

Speed Optimization Checklist (Server, Caching, CDN, Compression)

Speed optimization operates at multiple layers. Here’s the full stack, prioritized by impact:

- Server response time (TTFB) — Target under 200ms. Upgrade hosting if needed. Shared hosting almost never hits this target.

- CDN deployment — Cloudflare (free tier works for most sites), or Fastly/CloudFront for enterprise. Serves assets from the closest edge node to the user.

- Image optimization — Convert to WebP or AVIF. Compress to under 200KB per image. Use `srcset` for responsive images. Lazy load below-the-fold images (never lazy load the hero image — that kills LCP).

- Browser caching — Set `Cache-Control` headers for static assets. Minimum 1 year for versioned files (CSS, JS with hashes in filenames).

- Compression — Enable Gzip or Brotli compression on the server. Brotli achieves 15-20% better compression than Gzip.

- Critical CSS — Inline above-the-fold CSS in the `<head>`. Load the rest asynchronously.

- JavaScript deferral — Use `defer` or `async` on non-critical scripts. Load analytics and third-party scripts late (at the end of the `<body>`) or via a facade pattern.

- Minification — Minify CSS, JavaScript, and HTML. Tools: Terser (JS), cssnano (CSS), html-minifier.
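
The caching and compression items in the checklist above can be spot-checked from response headers. Here's a rough Python sketch that audits a headers dictionary you've already collected (for example from your browser's network panel). The function name `audit_static_asset_headers` is hypothetical, and the one-year floor is simply the target from the checklist.

```python
import re

ONE_YEAR_SECONDS = 365 * 24 * 60 * 60  # 31,536,000


def audit_static_asset_headers(headers: dict[str, str]) -> list[str]:
    """Return the caching/compression problems found in a static asset's headers."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    problems = []

    match = re.search(r"max-age=(\d+)", normalized.get("cache-control", ""))
    if match is None:
        problems.append("no Cache-Control max-age")
    elif int(match.group(1)) < ONE_YEAR_SECONDS:
        problems.append("max-age shorter than one year")

    if normalized.get("content-encoding", "").lower() not in ("br", "gzip"):
        problems.append("response is not Brotli- or Gzip-compressed")

    return problems


print(audit_static_asset_headers({
    "Cache-Control": "public, max-age=31536000, immutable",
    "Content-Encoding": "br",
}))  # []
```

Only apply the one-year check to versioned files; HTML documents usually need a much shorter lifetime.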

Hosting and Its Impact on Performance

Your hosting choice creates a performance ceiling that no amount of optimization can break through. If your server takes 800ms to respond, your page will never load in under 800ms. Period.

For most small business and content sites, I recommend managed WordPress hosting (Cloudways, Kinsta, WP Engine) or a static hosting solution (Vercel, Netlify) for headless setups. Shared hosting from budget providers typically delivers TTFB of 500ms-2 seconds, which makes passing Core Web Vitals nearly impossible. The difference between $5/month shared hosting and $25/month managed hosting can be the difference between page 1 and page 3.

Measuring Speed with PageSpeed Insights and GTmetrix

Two tools I use on every audit:

- Google PageSpeed Insights (PSI) — Uses real-world Chrome User Experience Report (CrUX) data plus Lighthouse lab tests. The “field data” section shows actual user experience. This is what Google uses for ranking. pagespeed.web.dev

- GTmetrix — Provides waterfall charts that show exactly which resources are loading in what order. Invaluable for identifying bottlenecks. The free tier tests from Vancouver; paid plans let you test from global locations.

Pro tip: always test PSI at least 3 times and take the median score. Single test results can vary by 10-15 points due to network conditions and server load.
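
If you log your runs, taking the median is a one-liner. A tiny Python sketch, with made-up scores and a hypothetical helper name:

```python
from statistics import median


def representative_score(scores: list[float]) -> float:
    """Return the median of repeated PageSpeed Insights performance scores."""
    if len(scores) < 3:
        raise ValueError("run at least 3 tests before taking the median")
    return median(scores)


# Three illustrative runs of the same page.
print(representative_score([62, 74, 68]))  # 68
```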

Mobile Optimization and Responsive Design

Since Google completed its shift to mobile-first indexing, the mobile version of your site is the primary version Google evaluates. If your desktop site is flawless but your mobile experience is broken, your rankings suffer across the board — desktop included.

Mobile-First Indexing: What You Need to Know

Mobile-first indexing means Google predominantly uses the mobile version of your content for indexing and ranking. This has been the default for all new sites since July 2019, and Google completed the migration for all sites by late 2023. What this means practically:

- If content exists on desktop but not mobile, Google may not index it

- If your mobile page loads slowly, your desktop rankings also suffer

- Structured data must be present on the mobile version

- Images, videos, and media need to be accessible on mobile

Check which crawler Google uses for your site in Google Search Console under Settings > About, where the indexing crawler should be listed as Googlebot Smartphone. If your site is on mobile-first indexing (it almost certainly is), every technical optimization should be tested on mobile first.

Responsive Design Best Practices

Responsive design uses CSS media queries and flexible layouts to adapt to any screen size. Here’s what actually matters for SEO:

- Viewport meta tag — `<meta name="viewport" content="width=device-width, initial-scale=1">` in the `<head>`. Without this, mobile browsers render your page at desktop width and zoom out.

- Fluid images — `max-width: 100%; height: auto;` prevents images from overflowing their containers. Combine with `srcset` to serve appropriately sized images.

- Touch targets — Buttons and links need minimum 48x48px tap targets with at least 8px spacing. Google retired Search Console’s Mobile Usability report in December 2023, so verify these yourself with device emulation in Chrome DevTools.

- No horizontal scrolling — If any element is wider than the viewport, the page scrolls sideways and fails basic mobile usability. Common culprits: fixed-width tables, oversized images, code blocks without `overflow-x: auto`.

- Readable font sizes — Minimum 16px base font size. Anything smaller triggers “text too small to read” warnings.
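
The viewport check from the list above is easy to automate across a batch of saved pages. This is a minimal Python sketch using only the standard library; `ViewportFinder` and `has_responsive_viewport` are illustrative names, and the check only looks for `width=device-width`, not the other items in the list.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Record the content attribute of <meta name="viewport">, if present."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """True if the page declares a viewport that follows the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "")


page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
print(has_responsive_viewport(page))  # True
```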

Mobile UX and Its Impact on Rankings

Google measures mobile UX through Core Web Vitals (especially INP and CLS on mobile), mobile usability reports, and behavioral signals. A site that’s technically responsive but practically unusable — tiny buttons, intrusive interstitials, content hidden behind accordions — will underperform.

The most common mobile UX mistake I see? Intrusive interstitials. Pop-ups that cover the main content on mobile devices have been a negative ranking factor since January 2017. Small cookie consent banners are fine. Full-screen “subscribe to our newsletter” pop-ups on page load are not.

Crawlability and Indexing

If search engines can’t find and access your pages, nothing else matters. Crawlability is the most fundamental layer of technical SEO — and it’s where I find critical errors on roughly 60% of the sites I audit.

How Googlebot Crawls Your Site

Googlebot discovers URLs through links, sitemaps, and previously known URLs. It then prioritizes which URLs to crawl based on factors like page importance, freshness, and crawl budget. Here’s the simplified process:

- Discovery — Googlebot finds a URL (via sitemap, internal link, external link, or re-crawl)

- Robots.txt check — Is this URL allowed? If disallowed, Googlebot stops here.

- Fetch — Downloads the HTML (and potentially renders JavaScript)

- Render — Processes JavaScript to see the final page state

- Index — Adds the page to Google’s index (or updates the existing entry)

- Rank — Evaluates the page against ranking algorithms for relevant queries

Understanding this pipeline helps you diagnose where issues occur. If a page isn’t ranking, is it because it’s not crawled? Not indexed? Or indexed but deemed low quality? Google Search Console’s URL Inspection tool tells you exactly where in this pipeline a URL stands.

Robots.txt, Sitemaps, and Crawl Budget

robots.txt controls which parts of your site crawlers can access. It lives at yourdomain.com/robots.txt. Here’s a solid baseline configuration:

    User-agent: *
    Allow: /
    Disallow: /wp-admin/
    Disallow: /cart/
    Disallow: /checkout/
    Disallow: /my-account/
    Disallow: /search/
    Disallow: /*?s=
    Disallow: /*?add-to-cart=
    Sitemap: https://yourdomain.com/sitemap.xml

Key rules: never block CSS or JavaScript files (Googlebot needs them to render your pages). Never block images unless they’re genuinely private. Always declare your sitemap location.
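
Before deploying a robots.txt change, it helps to test a few URLs against the rules. The Python sketch below approximates the precedence Google documents (the longest matching path wins, and Allow wins a tie) with `*` wildcards and a trailing `$` anchor. It is a simplification: it ignores user-agent groups, and the helper names are my own rather than any library's API.

```python
import re

ROBOTS_TXT = """\
User-agent: *
Allow: /
Disallow: /wp-admin/
Disallow: /cart/
Disallow: /checkout/
Disallow: /my-account/
Disallow: /search/
Disallow: /*?s=
Disallow: /*?add-to-cart=
Sitemap: https://yourdomain.com/sitemap.xml
"""


def parse_rules(robots_txt: str) -> list[tuple[bool, str]]:
    """Extract (is_allow, path_pattern) pairs; user-agent groups are ignored here."""
    rules = []
    for line in robots_txt.splitlines():
        field, _, value = line.partition(":")
        field, value = field.strip().lower(), value.strip()
        if field in ("allow", "disallow") and value:
            rules.append((field == "allow", value))
    return rules


def matches(pattern: str, path: str) -> bool:
    """Match a robots.txt pattern: '*' is a wildcard, a trailing '$' anchors the end."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    if anchored:
        regex += "$"
    return re.match(regex, path) is not None


def is_allowed(rules: list[tuple[bool, str]], path: str) -> bool:
    """Longest matching pattern wins; on a tie, Allow beats Disallow."""
    best = (-1, True)  # no matching rule means the path is crawlable
    for is_allow, pattern in rules:
        if matches(pattern, path):
            best = max(best, (len(pattern), is_allow))
    return best[1]


rules = parse_rules(ROBOTS_TXT)
for path in ("/blog/technical-seo-guide/", "/wp-admin/options.php", "/?s=shoes"):
    print(path, is_allowed(rules, path))
```

Treat the result as a pre-flight check only, and confirm real behavior with the URL Inspection tool in Search Console.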

XML Sitemaps tell search engines which URLs you consider important and when they were last modified. Requirements:

- Maximum 50,000 URLs per sitemap file (use sitemap index files for larger sites)

- Maximum 50MB uncompressed per file

- Include only indexable, canonical URLs

- Update `<lastmod>` dates when content actually changes (not automatically on every build)

- Submit in Google Search Console under Sitemaps
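
Most CMS plugins generate sitemaps for you, but the format is simple enough to build by hand. Here's a minimal Python sketch that writes one `<urlset>` document per 50,000 URLs, matching the limit above. The function name and the input shape (a list of URL and last-modified pairs) are assumptions for illustration; it doesn't enforce the 50MB limit or write the sitemap index file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000


def build_sitemaps(pages: list[tuple[str, str]]) -> list[bytes]:
    """Turn (url, lastmod) pairs into one UTF-8 XML document per sitemap file."""
    documents = []
    for start in range(0, len(pages), MAX_URLS_PER_FILE):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url, lastmod in pages[start:start + MAX_URLS_PER_FILE]:
            entry = ET.SubElement(urlset, "url")
            ET.SubElement(entry, "loc").text = url
            # lastmod should be the real content-change date, e.g. 2026-02-06.
            ET.SubElement(entry, "lastmod").text = lastmod
        documents.append(ET.tostring(urlset, encoding="utf-8", xml_declaration=True))
    return documents


files = build_sitemaps([("https://yourdomain.com/technical-seo-guide/", "2026-02-06")])
print(files[0].decode("utf-8"))
```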

Crawl budget matters primarily for large sites (10,000+ pages). For smaller sites, Google will typically crawl everything. But if you’re running an e-commerce site with thousands of product variants, filter pages, and pagination, you need to manage crawl budget carefully by noindexing low-value pages and blocking faceted navigation parameters in robots.txt. Pick one mechanism per URL, though: Googlebot can’t see a noindex tag on a page that robots.txt stops it from fetching.

Fixing Crawl Errors in Search Console

Google Search Console’s Pages report (formerly Coverage report) shows indexing issues. The errors I see most frequently:

- 404 Not Found — Broken URLs, usually from deleted pages or changed slugs. Fix with 301 redirects to the most relevant existing page.

- “Crawled – currently not indexed” — Google found the page but chose not to index it. Usually a quality signal. Improve the content, add internal links to the page, or consolidate with a stronger page.

- “Discovered – currently not indexed” — Google knows the URL exists but hasn’t crawled it yet. Often a crawl budget issue. Improve internal linking to the page and ensure it’s in your sitemap.

- Redirect errors — Redirect chains (A → B → C) or redirect loops. Flatten chains to single hops. Fix loops immediately.

- “Blocked by robots.txt” — You told Google not to crawl this URL. If it should be indexed, update your robots.txt.
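
Flattening redirect chains is mechanical once you have the redirect map exported from a crawler. A minimal Python sketch, assuming the map is a plain dictionary of source URL to target URL (the function name is hypothetical):

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Map every redirecting URL straight to its final destination.

    Raises ValueError if following a chain revisits a URL (a redirect loop).
    """
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        while target in redirects:  # the target itself redirects: keep following
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


# A -> B -> C becomes A -> C and B -> C, each a single hop.
print(flatten_redirects({"/a/": "/b/", "/b/": "/c/"}))
```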

URL Structure and Canonicalization

Clean URL structure isn’t just aesthetic — it affects crawlability, user experience, and keyword signals. Best practices:

- Keep URLs short and descriptive: `/technical-seo-guide/` beats `/blog/2026/02/the-complete-technical-seo-guide-for-beginners-and-experts/`

- Use hyphens, not underscores: `/site-speed/` not `/site_speed/`

- Lowercase only: URLs are case-sensitive on most servers

- Avoid parameters when possible: `/shoes/red/` beats `/shoes?color=red`

- Include a trailing slash consistently (either always or never — pick one and stick with it)
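
These rules are simple enough to lint automatically against a crawl export. A short Python sketch follows; `lint_url` is an illustrative name, and it assumes you chose the "always use a trailing slash" convention, so flip that check if you went the other way.

```python
from urllib.parse import urlsplit


def lint_url(url: str) -> list[str]:
    """Return the URL-structure rules from the list above that this URL breaks."""
    parts = urlsplit(url)
    issues = []
    if parts.path != parts.path.lower():
        issues.append("path contains uppercase characters")
    if "_" in parts.path:
        issues.append("path uses underscores instead of hyphens")
    if parts.query:
        issues.append("URL relies on query parameters")
    if not parts.path.endswith("/"):  # assumes the trailing-slash convention
        issues.append("missing trailing slash")
    return issues


print(lint_url("https://yourdomain.com/technical-seo-guide/"))  # []
print(lint_url("https://yourdomain.com/Site_Speed?color=red"))
```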

Canonical tags tell search engines which version of a URL is the “master” version. Every indexable page should have a self-referencing canonical tag:

    <link rel="canonical" href="https://yourdomain.com/technical-seo-guide/" />

Common canonical mistakes: pointing canonicals to 404 pages, using relative URLs instead of absolute, and having conflicting canonical declarations between the HTML and HTTP headers.
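
Two of those mistakes (relative URLs and conflicting declarations) can be caught from the HTML alone. A minimal Python sketch, with hypothetical helper names; it checks only the markup, not HTTP headers or whether the target returns a 404.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class CanonicalCollector(HTMLParser):
    """Collect the href of every <link rel="canonical"> on the page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values:
            self.hrefs.append(attributes.get("href") or "")


def check_canonical(html: str, page_url: str) -> list[str]:
    """Return canonical-tag problems for a page expected to be self-canonical."""
    collector = CanonicalCollector()
    collector.feed(html)
    if not collector.hrefs:
        return ["no canonical tag"]
    issues = []
    if len(set(collector.hrefs)) > 1:
        issues.append("conflicting canonical tags")
    href = collector.hrefs[0]
    parts = urlsplit(href)
    if not (parts.scheme and parts.netloc):
        issues.append("canonical URL is not absolute")
    elif href != page_url:
        issues.append("canonical is not self-referencing")
    return issues


html = '<link rel="canonical" href="https://yourdomain.com/technical-seo-guide/" />'
print(check_canonical(html, "https://yourdomain.com/technical-seo-guide/"))  # []
```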

On-Page SEO Technical Elements

On-page SEO bridges the gap between content and technical infrastructure. These elements live in your HTML and directly influence how search engines understand and rank your pages. For a broader look at how these fit into an overall SEO strategy, see our SEO for Small Business guide.

Title Tags, Meta Descriptions, and Header Hierarchy

Title tags remain one of the strongest on-page ranking signals. Rules I follow:

- 50-60 characters (Google truncates at roughly 580 pixels)

- Primary keyword near the front

- Unique per page — no duplicate titles across your site

- Include a compelling reason to click (number, year, benefit)

- Format: `Primary Keyword — Supporting Detail | Brand`

Meta descriptions don’t directly affect rankings, but they heavily influence click-through rate. A well-written meta description at position 5 can outperform a generic one at position 3. Keep them 150-160 characters, include the target keyword (it gets bolded in SERPs), and write them as a value proposition, not a summary.

Header hierarchy provides semantic structure. One H1 per page (matching the primary topic), H2s for major sections, H3s for subsections. Never skip levels — going from H2 directly to H4 breaks the document outline. Each H2 section should contain at least 150 words of substantive content.
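
The length targets for titles and meta descriptions are easy to check in bulk. A quick Python sketch with a hypothetical function name; note that it counts characters, which only approximates the pixel-based truncation Google actually applies.

```python
def check_snippet_lengths(title: str, description: str) -> list[str]:
    """Flag titles outside 50-60 characters and descriptions outside 150-160."""
    issues = []
    if not 50 <= len(title) <= 60:
        issues.append(f"title is {len(title)} characters (target 50-60)")
    if not 150 <= len(description) <= 160:
        issues.append(f"description is {len(description)} characters (target 150-160)")
    return issues


print(check_snippet_lengths("Technical SEO", "Too short."))
```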

Strategic Keyword Placement

Keyword stuffing is dead. Strategic keyword placement is not. Here’s where to include your target keywords for maximum signal with zero penalty risk:

- First 100 words — Include your primary keyword naturally in the opening paragraph

- H1 tag — Your primary keyword should appear in the page title

- At least one H2 — Include a variation of the primary keyword

- Image alt text — Describe the image accurately; include keywords only when relevant

- URL slug — Short, keyword-focused

- Last 100 words — Reinforces the topic for search engines processing the full page

Target keyword density between 0.8-1.5% for your primary term. That’s roughly 8-15 mentions in a 1,000-word article. If you’re writing naturally and staying on topic, you’ll usually hit this range without trying.
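
If you want to measure this rather than eyeball it, density here is just mentions divided by total words. A minimal Python sketch under that definition; the tokenizer is deliberately crude and the function name is my own.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Mentions of the keyword phrase as a percentage of all words in the text."""
    words = re.findall(r"[\w'-]+", text.lower())
    phrase = re.findall(r"[\w'-]+", keyword.lower())
    if not words or not phrase:
        return 0.0
    size = len(phrase)
    mentions = sum(
        1 for i in range(len(words) - size + 1) if words[i:i + size] == phrase
    )
    return 100 * mentions / len(words)


# One mention in a 100-word text is 1%, inside the 0.8-1.5% range.
sample = "technical seo " + "filler " * 98
print(keyword_density(sample, "technical SEO"))  # 1.0
```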

Image Optimization (Format, Size, Alt Text, Lazy Loading)

Images account for approximately 40% of a typical page’s total weight. Image optimization is simultaneously a speed play and a rankings play. Here’s the full technical stack:

| Factor | Action | Impact |
|---|---|---|
| File format | Use WebP or AVIF; keep JPEG fallback for older browsers | 50-80% smaller files than PNG |
| File size | Compress to under 200KB per image | Direct LCP improvement |
| File names | Descriptive, hyphenated, lowercase: `technical-seo-audit-checklist.webp` | Google Images ranking signal |
| Alt text | Describe the image content; include keyword only if naturally relevant | Accessibility + image search |
| Dimensions | Always set `width` and `height` attributes | Prevents CLS |
| Lazy loading | Add `loading="lazy"` to below-fold images; never on hero image | Faster initial load |
| Responsive | Use `srcset` and `sizes` attributes | Correct size per device |
| Image sitemap | Submit to Google Search Console | Improved image indexing |

A critical mistake: lazy loading your hero image or logo. This can spike LCP from 1.2 seconds to 3.8 seconds because the browser delays loading the largest visible element. The hero image should use fetchpriority="high" and loading="eager" (or simply omit the loading attribute).
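
Several rows of the table above can be checked straight from the markup. The Python sketch below flags missing dimensions, missing alt attributes, and a lazy-loaded hero. It assumes the first `<img>` in the document is the hero image, which is a simplification, and the names are illustrative.

```python
from html.parser import HTMLParser


class ImageAuditor(HTMLParser):
    """Collect image problems: missing dimensions, missing alt, lazy-loaded hero."""

    def __init__(self):
        super().__init__()
        self.image_count = 0
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        self.image_count += 1
        attributes = dict(attrs)
        src = attributes.get("src") or "(no src)"
        if "width" not in attributes or "height" not in attributes:
            self.issues.append(f"{src}: missing width/height")
        if "alt" not in attributes:
            self.issues.append(f"{src}: missing alt attribute")
        # Assumption: the first image in the document is the hero (LCP candidate).
        is_lazy = (attributes.get("loading") or "").lower() == "lazy"
        if self.image_count == 1 and is_lazy:
            self.issues.append(f"{src}: hero image is lazy loaded")


def audit_images(html: str) -> list[str]:
    auditor = ImageAuditor()
    auditor.feed(html)
    return auditor.issues


print(audit_images('<img src="hero.webp" loading="lazy" alt="Hero">'))
```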

Internal Linking Architecture

Internal links distribute PageRank, establish topical relationships, and help search engines discover and prioritize your content. Yet internal linking is the most neglected aspect of on-page SEO on most sites I audit.

Best practices:

- Hub-and-spoke model — Pillar pages link to cluster pages; cluster pages link back. This signals topical authority to Google.

- Descriptive anchor text — “technical SEO audit checklist” tells Google more than “click here” or “read more”

- 3-5 internal links minimum per page — More for pillar content, fewer for short posts

- Link to deep pages — Don’t just link to your homepage and top categories. Orphan pages (pages with zero internal links) are invisible to both users and crawlers.

- Fix broken internal links — Use Screaming Frog or Ahrefs Site Audit to find them. Every broken internal link is wasted link equity.
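
Orphan detection is a set difference once you have a crawl export. A minimal Python sketch, assuming you can produce the full list of known pages (for example from your sitemap) and a map of page to outgoing internal links; the function name is hypothetical.

```python
def find_orphans(all_pages: set[str], links: dict[str, set[str]], home: str) -> set[str]:
    """Return pages that no other page links to, excluding the homepage."""
    linked = set()
    for source, targets in links.items():
        # A page linking to itself does not rescue it from being an orphan.
        linked.update(target for target in targets if target != source)
    return all_pages - linked - {home}


pages = {"/", "/guide/", "/audit/", "/old-post/"}
links = {"/": {"/guide/"}, "/guide/": {"/audit/", "/"}}
print(find_orphans(pages, links, home="/"))  # {'/old-post/'}
```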

When building a content strategy, map internal links intentionally. Every new piece of content should link to 3-5 existing relevant pages, and those existing pages should be updated to link back. For more on how AI tools can help with this process, check out our best AI tools for business owners guide.

Structured Data and Schema Markup

Structured data helps search engines understand what your content is about, not just what it says. Schema markup is the language you use to provide that structured data, typically implemented as JSON-LD in your page’s <head>. It’s what powers rich results — star ratings, FAQ dropdowns, how-to steps, product prices, and event details directly in search results.

JSON-LD Schema Types (Article, FAQ, HowTo, Product, LocalBusiness)

| Schema Type | Use Case | Rich Result | Priority Fields |
|---|---|---|---|
| Article / BlogPosting | Blog posts, news articles, guides | Article appearance, author info | headline, author, datePublished, dateModified, image |
| FAQPage | Pages with question-answer content | Expandable FAQ dropdowns in SERPs (since August 2023, shown mainly for well-known government and health sites) | mainEntity → Question/Answer pairs |
| HowTo | Step-by-step tutorials | Step display in search results (Google retired this rich result in 2023) | step (name + text), totalTime, tool, supply |
| Product | E-commerce product pages | Price, availability, ratings in SERPs | name, offers (price, availability), aggregateRating |
| LocalBusiness | Local service businesses | Business info in local pack | name, address, geo, telephone, openingHours |
| Organization | Company/brand pages | Knowledge panel data | name, url, logo, sameAs, contactPoint |

How to Implement Schema Markup

JSON-LD is the recommended format (Google’s preference over Microdata or RDFa). Place it in the <head> or <body> of your HTML. Here’s an example for an Article:

    <script type="application/ld+json">
    {
      "@context": "https://schema.org",
      "@type": "Article",
      "headline": "Technical SEO Guide: Optimize Your Website",
      "author": {
        "@type": "Person",
        "name": "Dr. Matt",
        "url": "https://atlasmarketing.ai/about/"
      },
      "datePublished": "2026-02-06",
      "dateModified": "2026-02-06",
      "publisher": {
        "@type": "Organization",
        "name": "Atlas Marketing",
        "url": "https://atlasmarketing.ai"
      },
      "description": "Complete technical SEO guide covering Core Web Vitals, crawlability, schema markup, site audits, and more.",
      "mainEntityOfPage": {
        "@type": "WebPage",
        "@id": "https://atlasmarketing.ai/technical-seo-guide/"
      }
    }
    </script>

For FAQ schema, you add it as a separate JSON-LD block or combine it with the Article schema. Each question-answer pair becomes a mainEntity item within a FAQPage type. The key: only mark up genuine Q&A content that’s visible on the page. Using FAQ schema for content that doesn’t appear on the page violates Google’s guidelines.
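
Generating the FAQ block from the same question-answer pairs you render on the page keeps the markup and the visible content in sync. Here's a minimal Python sketch; `faq_jsonld` is a hypothetical helper and the sample question is a placeholder.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a FAQPage JSON-LD <script> block from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so an answer containing "</script>" cannot close the tag early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(faq_jsonld([
    ("What is technical SEO?",
     "Optimizing a site's infrastructure so search engines can crawl and index it."),
]))
```

Run the output through the Rich Results Test before publishing, as with any hand-written schema.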

Testing and Validating Schema

Always test before publishing. Two essential tools:

- Google Rich Results Test — Shows which rich results your page is eligible for and flags errors

- Schema.org Validator — Validates syntax and checks for missing recommended properties

Common validation errors: missing required fields (e.g., image for Article schema), incorrect nesting of types, and using deprecated properties. Fix all errors and as many warnings as possible before publishing.

Measuring Schema Impact on CTR and Rankings

Schema doesn’t directly boost rankings (Google has stated this), but it dramatically impacts click-through rates. Where they appear, FAQ rich results can increase SERP real estate by 2-3x, although since August 2023 Google shows them mainly for well-known government and health sites. Product schema with star ratings consistently outperforms listings without them. In my experience, implementing comprehensive schema across a site typically yields a 15-30% CTR improvement within 60 days.

Track schema health in Google Search Console under the “Enhancements” section, which lists valid and invalid items for each rich result type along with any errors that need fixing. For impressions and clicks, filter the Performance report by search appearance. For sites competing for AI Overview inclusion, well-structured schema can improve your chances of being cited, since AI engines parse structured data to understand content relationships.

SEO Site Audits: Finding and Fixing Issues

An SEO audit is a systematic evaluation of your website’s search engine health. I run one before starting any optimization work — you can’t fix what you haven’t diagnosed. Think of it as an MRI for your website.

What an SEO Audit Covers

A comprehensive technical SEO audit examines:

- Crawl health — Crawl errors, redirect chains, orphan pages, robots.txt issues

- Indexing status — Which pages are indexed, which aren’t, and why

- Page speed — Core Web Vitals for both mobile and desktop

- On-page elements — Title tags, meta descriptions, header hierarchy, keyword usage

- Content quality — Thin content, duplicate content, cannibalization

- Internal linking — Orphan pages, link equity distribution, anchor text

- Mobile usability — Responsive design, tap targets, font sizes

- Structured data — Schema implementation and validation

- Security — HTTPS status, mixed content issues

- Backlink profile — Toxic links, anchor text distribution, referring domain quality

How to Conduct a Technical Audit (Step by Step)

Here’s my exact process — the same one I use for every client engagement:

- Crawl the site — Run Screaming Frog (up to 500 URLs free) or Sitebulb. Export all URLs with status codes, titles, meta descriptions, H1s, word counts, and canonical tags.

- Check Google Search Console — Review the Pages report for indexing issues. Check the Performance report for keyword cannibalization (multiple URLs ranking for the same keyword).

- Run PageSpeed Insights — Test your 10 highest-traffic pages. Note Core Web Vitals scores for mobile.

- Audit internal links — Identify orphan pages (no inbound internal links). Map your hub-and-spoke structure. Find broken internal links.

- Review structured data — Run key pages through the Rich Results Test. Check for missing schema on content types that should have it.

- Analyze backlinks — Use Ahrefs or SEMrush to review referring domains. Identify toxic links for disavow consideration.

- Mobile test — Google retired its Mobile-Friendly Test in December 2023, so run Lighthouse in Chrome DevTools with mobile emulation on 5-10 representative pages.

- Security check — Verify HTTPS on all pages. Check for mixed content warnings. Confirm HTTP → HTTPS redirects work correctly.

- Compile findings — Create a prioritized list of issues with estimated impact (high/medium/low) and effort (high/medium/low).

- Build an action plan — Start with high-impact, low-effort fixes. Schedule larger projects by quarter.
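
The last two steps amount to a sort: highest impact first, lowest effort first within the same impact. A small Python sketch with made-up findings; the `Finding` class and `prioritize` function are illustrative, not a prescribed format.

```python
from dataclasses import dataclass

LEVELS = {"low": 1, "medium": 2, "high": 3}


@dataclass
class Finding:
    issue: str
    impact: str  # "high", "medium", or "low"
    effort: str  # "high", "medium", or "low"


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Order findings by impact (descending), then by effort (ascending)."""
    return sorted(findings, key=lambda f: (-LEVELS[f.impact], LEVELS[f.effort]))


audit = [
    Finding("Thin content on category pages", "medium", "high"),
    Finding("robots.txt blocks /blog/", "high", "low"),
    Finding("Missing schema on product pages", "high", "medium"),
]
for finding in prioritize(audit):
    print(finding.issue)
```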

Best Audit Tools (Screaming Frog, Ahrefs, GSC)

| Tool | Best For | Price | Key Features |
|---|---|---|---|
| Screaming Frog | Technical crawling | Free (500 URLs) / $259/yr | Full site crawl, redirect mapping, duplicate detection, custom extraction |
| Google Search Console | Indexing and performance data | Free | Index coverage, performance reports, URL inspection, Core Web Vitals |
| Ahrefs Site Audit | Comprehensive SEO auditing | From $129/mo | 100+ SEO checks, internal link analysis, content quality scores |
| Semrush Site Audit | Ongoing monitoring | From $139.95/mo | Automated weekly crawls, progress tracking, thematic reports |
| Sitebulb | Visual technical audits | From $13.50/mo | Visual crawl maps, priority hints, accessibility checks |
| PageSpeed Insights | Core Web Vitals | Free | Lab + field data, CWV metrics, Lighthouse recommendations |

My recommendation for small businesses: Google Search Console (free) + Screaming Frog (free tier). This combination catches 80% of technical issues. Add Ahrefs when you’re ready to invest in deeper competitive analysis and backlink monitoring. See our tools and reviews section for detailed comparisons.

Prioritizing Fixes by Impact

Not all SEO issues are equal. After every audit, I categorize fixes into a priority matrix:

- Critical (fix this week) — Blocked crawling (robots.txt errors, noindex on important pages), site-wide HTTPS issues, server errors (5xx), massive CLS or LCP failures

- High (fix this month) — Missing or duplicate title tags, broken redirects, orphan pages with ranking potential, missing schema on key page types

- Medium (fix this quarter) — Thin content pages, suboptimal internal linking, image optimization, minor CWV improvements

- Low (backlog) — Cosmetic URL improvements, minor meta description tweaks, adding alt text to decorative images

Focus your energy on the critical and high-priority items first. I’ve seen sites jump 15-20 positions just by fixing a misconfigured robots.txt file that was blocking their entire blog directory.

Off-Page SEO and Link Building

Off-page SEO encompasses everything that happens outside your website that affects your rankings. Backlinks remain the dominant off-page signal, but brand mentions, social proof, and digital PR increasingly contribute to how search engines (and AI engines) evaluate your authority.

Why Backlinks Still Matter in 2026

Despite years of predictions that “links are dead,” backlinks remain one of Google’s top 3 ranking factors. The difference in 2026? Quality has completely overtaken quantity. One contextual link from a relevant, authoritative site is worth more than 100 directory submissions or forum signatures.

What constitutes a quality backlink:

- From a topically relevant site (a marketing blog linking to SEO content > a cooking blog linking to SEO content)

- Editorially placed (someone chose to link to you because your content adds value)

- From a page that itself has authority (check referring page’s URL Rating, not just domain authority)

- With natural anchor text (not exact-match keywords every time)

- From a unique referring domain (10 links from 10 different sites beats 100 links from 1 site)

Link Building Strategies That Work

These are the strategies I use and recommend in 2026 — no black hat, no spam, no risk of penalty:

- Create link-worthy content — Original research, industry surveys, comprehensive guides (like this one), free tools, unique data visualizations. If your content is genuinely the best resource on a topic, links come naturally over time.

- Guest posting (selectively) — Write for relevant, authoritative publications in your industry. Focus on sites with real audiences, not link farms disguised as blogs. One post on a respected industry site beats 20 posts on generic “write for us” sites.

- Broken link building — Find broken external links on relevant sites (Ahrefs Content Explorer makes this easy). Reach out offering your content as a replacement. High success rate because you’re helping them fix a problem.

- HARO / Connectively / Qwoted — Respond to journalist queries as an expert source. When quoted, you typically receive a backlink from high-authority news sites.

- Resource page outreach — Find “best resources” or “recommended tools” pages in your niche. If your content genuinely belongs there, pitch it.

- Competitor backlink analysis — Use Ahrefs to see who links to your competitors. If they link to inferior content on the same topic, you have a pitch angle.

Digital PR and Brand Mentions

Digital PR is link building’s evolved form. Instead of begging for links, you create newsworthy assets — original research, data studies, expert commentary on trending topics — and pitch them to journalists and publications.

Brand mentions (even without links) are increasingly valuable. Google’s patent on “implied links” suggests that unlinked brand mentions contribute to authority signals. Combined with the rise of AI engines that rely on entity recognition, being mentioned across the web — in forums, news articles, social media, and industry publications — builds the kind of authority that translates to both traditional and AI search visibility.

Social Signals and Content Distribution

Social media shares aren’t a direct ranking factor (Google has confirmed this repeatedly). But social distribution serves SEO indirectly:

- Discovery — Content shared on social platforms gets seen by bloggers, journalists, and content creators who might link to it

- Indexing speed — Heavily shared content often gets crawled faster

- Brand signals — Active social presence correlates with the kind of authority signals Google does track

- AI engine signals — AI engines like ChatGPT and Perplexity reference social discussions, Reddit threads, and community consensus when generating answers

Don’t ignore content distribution. Publishing a great article and waiting for organic traffic is a losing strategy in 2026. Share it on LinkedIn, relevant subreddits, industry Slack communities, and X. Repurpose it into video, infographics, and social carousels. Every touchpoint increases the probability of earning links and mentions.

User Experience as a Ranking Factor

Google’s ranking systems increasingly reward sites that provide genuinely good user experiences. This isn’t theoretical — Core Web Vitals, page experience signals, and behavioral data all feed into ranking decisions.

UX Signals Google Tracks

While Google doesn’t publicly confirm using all UX metrics for ranking, here’s what we know they measure or have the ability to measure:

- Core Web Vitals (LCP, INP, CLS) — Confirmed ranking signals

- Mobile friendliness — Confirmed ranking signal

- HTTPS — Confirmed ranking signal

- No intrusive interstitials — Confirmed negative signal

- Pogo-sticking — Users clicking a result, immediately returning to SERPs, and clicking a different result. Strong indirect signal of content quality mismatch.

- Dwell time / time on page — Not officially confirmed, but patent filings and Chrome data suggest it matters for query satisfaction metrics

The practical takeaway: build pages that genuinely satisfy user intent. If someone searches “how to fix robots.txt errors” and lands on your page, they should find a clear, complete answer without needing to go back to Google. That behavior signal — query satisfied, search session ended — is the ultimate UX ranking factor.

Navigation and Site Architecture

Good site architecture serves both users and crawlers. The “3-click rule” is oversimplified, but the principle holds: important content should be easily reachable from any page on your site.
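
To see how reachable your pages really are, you can measure click depth from the homepage with a breadth-first search over your internal links. The sketch below assumes you already have a link graph (for example, exported from a crawl tool); the `site` dictionary and its URLs are hypothetical.

```python
from collections import deque

def click_depths(links, home):
    """Return the minimum number of clicks from `home` to each reachable URL.

    `links` maps a URL to the list of internal URLs it links to.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical internal-link graph, for illustration only.
site = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/blog/technical-seo/"],
    "/blog/technical-seo/": ["/blog/technical-seo/archive/"],
    "/blog/technical-seo/archive/": ["/blog/old-post/"],
    "/services/": [],
}

depths = click_depths(site, "/")
too_deep = sorted(url for url, depth in depths.items() if depth > 3)
print(too_deep)
```

Pages that never appear in `depths` are orphans: nothing reachable from the homepage links to them.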

- Flat hierarchy — Keep important pages within 3 levels of the homepage

- Breadcrumbs — Show users (and search engines) where they are in the site structure. Implement with BreadcrumbList schema.

- Logical categorization — Group related content into clear categories and subcategories. Your SEO Strategy section should link to all SEO-related content.

- Persistent navigation — Key pages (services, blog, contact, about) should be accessible from every page via the main nav

- Footer links — Use the footer for secondary navigation: legal pages, resource categories, popular posts
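
For the breadcrumb item above, BreadcrumbList markup is simple enough to generate from the trail you already render. This is a minimal sketch, assuming a list of (name, URL) pairs; the `example.com` URLs are placeholders and the helper name is my own.

```python
import json

def breadcrumb_jsonld(trail):
    """Build schema.org BreadcrumbList markup from (name, url) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": position, "name": name, "item": url}
            for position, (name, url) in enumerate(trail, start=1)
        ],
    }

# Placeholder trail; swap in the real page hierarchy.
trail = [
    ("Home", "https://example.com/"),
    ("Blog", "https://example.com/blog/"),
    ("Technical SEO Guide", "https://example.com/blog/technical-seo/"),
]

markup = breadcrumb_jsonld(trail)
script_tag = (
    '<script type="application/ld+json">'
    + json.dumps(markup, indent=2)
    + "</script>"
)
print(script_tag)
```

Positions start at 1 and follow the order of the visible breadcrumb, so the markup and the on-page trail stay in sync.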

Content Readability and Accessibility

Readability directly impacts engagement metrics. Content that’s hard to read gets abandoned. Accessibility isn’t just ethical — it’s practical SEO.

- Flesch-Kincaid Grade Level — Target 7th-9th grade for most web content (lower for consumer audiences, higher for B2B technical content)

- Short paragraphs — 2-4 sentences max on the web. Walls of text kill engagement.

- Subheadings every 200-300 words — Scannable structure lets users find what they need fast

- Semantic HTML — Proper heading hierarchy, lists for list content, tables for tabular data. Screen readers depend on this.

- Alt text on all meaningful images — Descriptive, not stuffed with keywords

- Sufficient color contrast — WCAG 2.1 AA minimum (4.5:1 ratio for body text)

- Skip navigation links — Allow keyboard users to bypass repetitive navigation
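
If you want to check the Flesch-Kincaid target without a dedicated tool, the grade-level formula is easy to compute yourself. The sketch below uses the standard formula; the syllable counter is a rough heuristic of my own, so treat the result as an estimate and expect dedicated readability tools to differ slightly.

```python
import re

def count_syllables(word):
    """Rough heuristic: count vowel groups, discounting a trailing silent 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def flesch_kincaid_grade(text):
    """Flesch-Kincaid Grade Level:
    0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59

simple = "The cat sat. The dog ran."
dense = "Comprehensive infrastructure optimization necessitates systematic evaluation."
print(round(flesch_kincaid_grade(simple), 2))
print(round(flesch_kincaid_grade(dense), 2))
```

Short sentences and short words pull the grade down; long, polysyllabic sentences push it up.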

Accessibility improvements often have a side effect of improving SEO. Semantic HTML helps search engines understand content structure. Alt text feeds image search. Proper heading hierarchy establishes topical hierarchy. When you build for accessibility, you build for SEO simultaneously.
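
The 4.5:1 contrast requirement in the list above can also be checked in code. This sketch follows the WCAG 2.1 definitions of relative luminance and contrast ratio; the function names are my own, and it assumes six-digit hex colors.

```python
def _linearize(channel):
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.1 definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(hex_color):
    """Relative luminance of a six-digit hex color such as '#1a2b3c'."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)

def contrast_ratio(foreground, background):
    """Contrast ratio (lighter + 0.05) / (darker + 0.05), from 1 to 21."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)

def passes_aa_body_text(foreground, background):
    """WCAG 2.1 AA requires at least 4.5:1 for normal-size body text."""
    return contrast_ratio(foreground, background) >= 4.5

print(passes_aa_body_text("#767676", "#ffffff"))
print(passes_aa_body_text("#777777", "#ffffff"))
```

The two grays in the example differ by a single step per channel, yet sit on opposite sides of the 4.5:1 line, which is why eyeballing contrast is unreliable.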

Website Security (HTTPS) and Trust Signals

HTTPS has been a Google ranking signal since 2014, and in 2026, there’s zero excuse for running a site on HTTP. But security goes beyond SSL certificates — trust signals affect both user behavior and search engine evaluation, especially for YMYL (Your Money or Your Life) content.

SSL Certificates and HTTPS Migration

If you’re still on HTTP (check by looking at your URL bar), here’s the migration process:

- Obtain an SSL certificate (most hosting providers offer free Let’s Encrypt certificates; Cloudflare provides free SSL via their proxy)

- Install the certificate on your server

- Update all internal links from http:// to https://

- Set up 301 redirects from all HTTP URLs to their HTTPS equivalents

- Update your sitemap to use HTTPS URLs

- Update your canonical tags to use HTTPS

- Add the HTTPS property to Google Search Console

- Update any hardcoded HTTP references in CSS, JavaScript, and image paths (mixed content)
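
Once the redirects are in place, it's worth verifying that each HTTP URL reaches its HTTPS equivalent in a single 301 hop. The sketch below is one way to do that with the standard library; `check_https_redirect` and the fake responses are hypothetical, and the real `fetch_head` helper is only a starting point (some servers treat HEAD requests differently from GET).

```python
import http.client
from urllib.parse import urljoin, urlsplit

REDIRECT_CODES = {301, 302, 303, 307, 308}

def fetch_head(url):
    """Send one HEAD request without following redirects.

    Returns (status_code, Location header or None).
    """
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=10)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=10)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()

def trace_redirects(url, fetch=fetch_head, max_hops=10):
    """Follow redirects manually and return the (url, status) pair for each hop."""
    hops = []
    for _ in range(max_hops):
        status, location = fetch(url)
        hops.append((url, status))
        if status not in REDIRECT_CODES or not location:
            break
        url = urljoin(url, location)  # Location may be a relative URL
    return hops

def check_https_redirect(http_url, fetch=fetch_head):
    """Return a list of problems; an empty list means the redirect looks clean."""
    expected = "https://" + http_url[len("http://"):]
    hops = trace_redirects(http_url, fetch)
    problems = []
    if hops[0][1] != 301:
        problems.append(f"first response is {hops[0][1]}, not 301")
    if len(hops) > 2:
        problems.append(f"redirect chain with {len(hops) - 1} hops")
    if hops[-1][0] != expected:
        problems.append(f"ends at {hops[-1][0]}, expected {expected}")
    return problems

# Fake responses so the example runs offline; pass no `fetch` to hit a real site.
clean_site = {
    "http://example.com/page": (301, "https://example.com/page"),
    "https://example.com/page": (200, None),
}
chained_site = {
    "http://example.com/page": (302, "http://www.example.com/page"),
    "http://www.example.com/page": (301, "https://www.example.com/page"),
    "https://www.example.com/page": (200, None),
}
clean_result = check_https_redirect("http://example.com/page", fetch=clean_site.get)
chained_result = check_https_redirect("http://example.com/page", fetch=chained_site.get)
print(clean_result)
print(chained_result)
```

Injecting `fetch` keeps the logic testable without network access and lets you swap in whatever HTTP client you already use.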

Mixed content is the most common post-migration issue. Your page loads over HTTPS, but some resources (images, scripts, stylesheets) still load over HTTP. Browsers block or flag these requests, which can break scripts and styles and undermines the security benefits. Use browser developer tools (Console tab) or Why No Padlock? to find and fix mixed content.
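
If you'd rather scan templates or saved pages in bulk, a small parser can list the insecure references for you. This is a sketch using Python's built-in HTML parser; the tag list is my own selection and does not cover everything (CSS `url()` references and resources injected by JavaScript are not detected).

```python
from html.parser import HTMLParser

# Tag -> attribute that makes the browser fetch a subresource.
RESOURCE_ATTRS = {
    "img": "src", "script": "src", "iframe": "src",
    "source": "src", "video": "src", "audio": "src",
    "link": "href",
}

class MixedContentFinder(HTMLParser):
    """Collect (tag, url) pairs for subresources referenced over plain HTTP."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr_name = RESOURCE_ATTRS.get(tag)
        if attr_name is None:
            return  # ordinary <a href> navigation links are not mixed content
        attrs = dict(attrs)
        if tag == "link":
            # Only stylesheet links are loaded as page resources here;
            # rel="canonical" and similar hints are skipped.
            rel = (attrs.get("rel") or "").lower().split()
            if "stylesheet" not in rel:
                return
        value = attrs.get(attr_name) or ""
        if value.lower().startswith("http://"):
            self.insecure.append((tag, value))

# Placeholder page source, for illustration only.
html = """
<link rel="stylesheet" href="http://example.com/style.css">
<link rel="canonical" href="http://example.com/page">
<img src="https://example.com/logo.png" alt="Logo">
<img src="http://example.com/hero.jpg" alt="Hero">
<script src="http://cdn.example.com/app.js"></script>
<a href="http://example.com/other">Another page</a>
"""

finder = MixedContentFinder()
finder.feed(html)
for tag, url in finder.insecure:
    print(tag, url)
```

An HTTP canonical tag is still worth fixing after a migration, but it is a separate issue from mixed content, so this sketch leaves it out.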

Privacy Policy, Contact Pages, and Trust Elements

Google’s Search Quality Rater Guidelines specifically mention trust signals when evaluating page quality. These elements don’t have a direct ranking weight, but they contribute to your site’s overall trustworthiness assessment:

- Privacy Policy — Required by law in most jurisdictions (GDPR, CCPA). Google expects it.

- Terms of Service — Especially important for e-commerce and SaaS sites

- Contact page — Physical address, phone number, email. For local businesses, this feeds into local SEO signals.

- About page — Author bios with credentials, company history, team information. Critical for E-E-A-T.

- Clear attribution — Who wrote this content? What are their qualifications? Named authors with visible bios outperform anonymous content.

- Testimonials and reviews — Social proof of real business activity

For YMYL topics (health, finance, legal), trust signals are even more critical. Google holds these pages to a higher standard, and missing trust elements can prevent ranking entirely.

Technical SEO Checklist (Quick Reference)

Use this checklist as a quick reference for every site you build or audit. Each item is linked to the relevant section above.

| Category | Check | Target / Standard | Priority |

|---|---|---|---|

| Speed | LCP (Largest Contentful Paint) | <2.5 seconds | Critical |

| Speed | INP (Interaction to Next Paint) | <200ms | Critical |

| Speed | CLS (Cumulative Layout Shift) | <0.1 | Critical |

| Speed | TTFB (Time to First Byte) | <200ms (server) / <800ms (total) | High |

| Speed | Images compressed (WebP/AVIF, <200KB) | All images optimized | High |

| Speed | CDN deployed | Cloudflare or equivalent active | High |

| Speed | Browser caching enabled | Cache-Control headers set | Medium |

| Speed | Gzip/Brotli compression | Enabled on server | Medium |

| Mobile | Viewport meta tag present | width=device-width, initial-scale=1 | Critical |

| Mobile | Touch targets ≥ 48x48px | Minimum size with 8px spacing | High |

| Mobile | No horizontal scrolling | All content within viewport | High |

| Mobile | Font size ≥ 16px | Base body text readable | Medium |

| Crawl | robots.txt properly configured | No critical directories blocked | Critical |

| Crawl | XML sitemap submitted | In GSC, <50K URLs per file | Critical |

| Crawl | Crawl errors resolved | Zero 5xx errors, minimal 404s | High |

| Crawl | Canonical tags on all indexable pages | Self-referencing, absolute URLs | High |

| Crawl | No redirect chains (>1 hop) | All redirects are single 301s | Medium |

| On-Page | Unique title tag per page | 50-60 characters, keyword-optimized | Critical |

| On-Page | Meta description per page | 150-160 characters, compelling CTA | High |

| On-Page | One H1 per page | Contains primary keyword | Critical |

| On-Page | Proper heading hierarchy (H1→H2→H3) | No skipped levels | High |

| On-Page | Image alt text on all meaningful images | Descriptive, not keyword-stuffed | High |

| On-Page | 3-5+ internal links per page | Descriptive anchor text | High |

| Schema | JSON-LD schema implemented | Appropriate type for content | High |

| Schema | Schema validated (zero errors) | Rich Results Test passed | High |

| Security | HTTPS active | Valid SSL, no mixed content | Critical |

| Security | HTTP → HTTPS redirects | All HTTP URLs 301 to HTTPS | Critical |

| Trust | Privacy policy page | Linked from footer | High |

| Trust | Contact page with real info | Address, phone, email | High |

| Trust | About page with author credentials | E-E-A-T signals present | High |

| UX | No intrusive interstitials | No full-screen pop-ups on mobile | High |

| UX | Breadcrumb navigation | With BreadcrumbList schema | Medium |

| UX | Content accessible within 3 clicks | Flat site architecture | Medium |
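
Several of the on-page and mobile checks above can be automated against a page's HTML. The sketch below covers only a handful of rows (title length, meta description length, H1 count, viewport tag, missing alt attributes) using the targets from the table; the class and function names are hypothetical, and the sample page is made up.

```python
from html.parser import HTMLParser

class OnPageAudit(HTMLParser):
    """Collect the few page facts needed for a basic on-page check."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.has_viewport = False
        self.h1_count = 0
        self.images_missing_alt = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "img" and "alt" not in attrs:
            # An empty alt="" is valid for decorative images, so only a
            # missing attribute is counted.
            self.images_missing_alt += 1
        elif tag == "meta":
            name = (attrs.get("name") or "").lower()
            if name == "description":
                self.meta_description = attrs.get("content") or ""
            elif name == "viewport":
                self.has_viewport = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def audit(html):
    """Return a list of human-readable issues for one page."""
    page = OnPageAudit()
    page.feed(html)
    issues = []
    title_length = len(page.title.strip())
    if not 50 <= title_length <= 60:
        issues.append(f"title is {title_length} characters (target 50-60)")
    if page.meta_description is None:
        issues.append("meta description missing")
    elif not 150 <= len(page.meta_description) <= 160:
        issues.append(f"meta description is {len(page.meta_description)} characters (target 150-160)")
    if page.h1_count != 1:
        issues.append(f"{page.h1_count} H1 tags (target exactly 1)")
    if not page.has_viewport:
        issues.append("viewport meta tag missing")
    if page.images_missing_alt:
        issues.append(f"{page.images_missing_alt} image(s) without an alt attribute")
    return issues

# Made-up page for illustration.
sample = """<html><head>
<title>Technical SEO Guide</title>
<meta name="viewport" content="width=device-width, initial-scale=1">
</head><body>
<h1>Technical SEO</h1>
<h1>Second heading</h1>
<img src="/hero.webp">
</body></html>"""

issues = audit(sample)
for issue in issues:
    print(issue)
```

Checks like speed, crawl errors, and schema validation still need their dedicated tools; this only catches the markup-level items.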

Frequently Asked Questions

What is the most important technical SEO factor in 2026?

Core Web Vitals — specifically LCP (Largest Contentful Paint). Site speed is the one technical factor that impacts every other ranking signal. A slow site gets crawled less, indexed less, and abandoned by users more. If you fix only one thing, make your site fast. Target LCP under 2.5 seconds on mobile.

How often should I run a technical SEO audit?

Comprehensive audits quarterly. Monitoring crawl errors and Core Web Vitals should be weekly (set up alerts in Google Search Console). After any major site change — migration, redesign, CMS update, new plugin — run an immediate audit. Issues introduced during changes can tank rankings within days if not caught.
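
A weekly Core Web Vitals check can be as simple as comparing your latest field values against the thresholds. In this sketch the "good" limits match the checklist above and the "poor" cutoffs are Google's published boundaries; the metric keys and the sample measurements are hypothetical, and you would feed in numbers from whatever reporting source you use.

```python
# (good limit, poor cutoff) for each metric.
THRESHOLDS = {
    "lcp_s": (2.5, 4.0),    # Largest Contentful Paint, seconds
    "inp_ms": (200, 500),   # Interaction to Next Paint, milliseconds
    "cls": (0.1, 0.25),     # Cumulative Layout Shift, unitless
}

def rate(metric, value):
    """Classify one measurement as good, needs improvement, or poor."""
    good_limit, poor_cutoff = THRESHOLDS[metric]
    if value <= good_limit:
        return "good"
    if value <= poor_cutoff:
        return "needs improvement"
    return "poor"

def alerts(measurements):
    """Return only the metrics that are not rated good."""
    ratings = {metric: rate(metric, value) for metric, value in measurements.items()}
    return {metric: rating for metric, rating in ratings.items() if rating != "good"}

# Hypothetical weekly snapshot for one page template.
this_week = {"lcp_s": 3.1, "inp_ms": 180, "cls": 0.31}
print(alerts(this_week))
```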

Does structured data (schema markup) directly improve rankings?

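Google has stated that schema markup is not a direct ranking factor. However, it can improve click-through rates through rich results such as star ratings, product details, and breadcrumbs, and higher CTR is a positive user signal. Be aware that since 2023 Google has shown FAQ rich results only for a narrow set of authoritative government and health sites and has retired how-to rich results, so confirm a result type is still supported before investing in it. Clearly structured data may also help AI engines like ChatGPT and Claude interpret your content. The indirect benefits can be substantial.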

Is link building still worth the effort?

Yes, but the approach has evolved. Mass outreach and low-quality guest posting are largely ineffective. What works in 2026: creating genuinely link-worthy content (original research, comprehensive guides, free tools), digital PR, HARO/Connectively responses, and broken link building. Quality over quantity — one relevant, authoritative link outperforms dozens of low-quality ones.

How do I prioritize technical SEO fixes when there are hundreds of issues?

Use an impact-effort matrix. Fix high-impact, low-effort issues first (misconfigured robots.txt, missing HTTPS redirects, broken canonical tags). Then move to high-impact, high-effort items (server migration for speed, site architecture overhaul). Ignore low-impact issues until the critical and high-priority items are resolved. The 80/20 rule applies: 20% of fixes typically resolve 80% of ranking barriers.
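
Here is one way to turn that matrix into a sorted backlog. It's a minimal sketch: the 1-5 scoring scale, the cutoffs, and the example scores are all assumptions you should replace with your own estimates.

```python
from dataclasses import dataclass

@dataclass
class Issue:
    name: str
    impact: int  # 1 (low) to 5 (high), assumed scale
    effort: int  # 1 (low) to 5 (high), assumed scale

def prioritize(issues, min_impact=3, max_quick_effort=2):
    """Drop low-impact issues, then list quick wins before larger projects.

    Within each group, higher impact comes first and lower effort breaks ties.
    """
    shortlist = [issue for issue in issues if issue.impact >= min_impact]
    return sorted(
        shortlist,
        key=lambda issue: (issue.effort > max_quick_effort, -issue.impact, issue.effort),
    )

# Example scores are illustrative guesses, not measurements.
backlog = [
    Issue("Site architecture overhaul", impact=4, effort=5),
    Issue("Footer link styling", impact=1, effort=1),
    Issue("Missing HTTPS redirects", impact=5, effort=2),
    Issue("Server migration for speed", impact=5, effort=5),
    Issue("Misconfigured robots.txt", impact=5, effort=1),
]

ordered = [issue.name for issue in prioritize(backlog)]
for name in ordered:
    print(name)
```

Low-impact items are filtered out rather than sorted to the bottom, which mirrors the advice to ignore them until the critical work is done.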

What’s the difference between crawling and indexing?

Crawling is when Googlebot downloads your page. Indexing is when Google adds that page to its search database. A page can be crawled but not indexed (Google decided the content wasn’t worth including). A page can also be discovered but not crawled (Google knows the URL but hasn’t fetched it yet). Use the URL Inspection tool in Search Console to see exactly where a URL stands in this pipeline.

How do Core Web Vitals affect AI search rankings?

AI search engines (Google AI Mode, ChatGPT Search, Perplexity) strongly prefer fast-loading, well-structured sites. Sites with server response times under 200ms receive significantly more LLM crawler requests. Google AI Mode pulls roughly 76% of its citations from pages that already rank in the traditional top 10 — and those top 10 positions are influenced by CWV. Speed isn’t just a traditional SEO factor anymore; it’s a GEO (Generative Engine Optimization) factor too.

Start Optimizing Your Technical SEO Today

Technical SEO isn’t a one-time project. It’s an ongoing practice that requires regular auditing, monitoring, and optimization. The sites that dominate search in 2026 — both traditional SERPs and AI-generated answers — are the ones that treat technical excellence as a competitive advantage, not an afterthought.

Start with the checklist above. Run your site through Google Search Console, PageSpeed Insights, and a crawl tool like Screaming Frog. Fix the critical issues first. Then build a regular cadence: weekly monitoring, quarterly audits, and a full check after any major site change.

If you need help identifying and fixing technical SEO issues on your site, Atlas Marketing provides comprehensive technical SEO audits and implementation support. We don’t just hand you a list of problems — we fix them and track the results. Get in touch to discuss your site’s technical health.