Claude AI: Guide, News & Latest Updates for 2026

We literally use Claude to build this entire site. Every article here, including the one you’re reading right now, was drafted, analyzed, or optimized using Claude AI. After 8 months of daily use, I’m telling you exactly what Claude does better than ChatGPT, where it falls short, and why it’s become our primary AI tool for SEO and content work.

This isn’t a theoretical comparison. This is me walking through our actual workflows, real pricing decisions ($20/month vs $200/month tiers), specific use cases where Claude dominates, and the honest limitations we’ve hit.

Claude AI Model Lineup

Current models as of February 2026

| Model | Released | Tier | Context | Key Strength | Speed | Cost |

|---|---|---|---|---|---|---|

| Claude Opus 4.6 | Feb 2026 | Flagship | 200K tokens | Agent teams, enhanced coding, PowerPoint generation | Thorough | Premium |

| Claude Sonnet 4 | May 2025 | Workhorse | 200K tokens | 77.2% coding accuracy, best value | Fast | Mid-tier |

| Claude 3.5 Sonnet | Mid-2024 | Workhorse | 200K tokens | Balanced performance, popular choice | Moderate | Mid-tier |

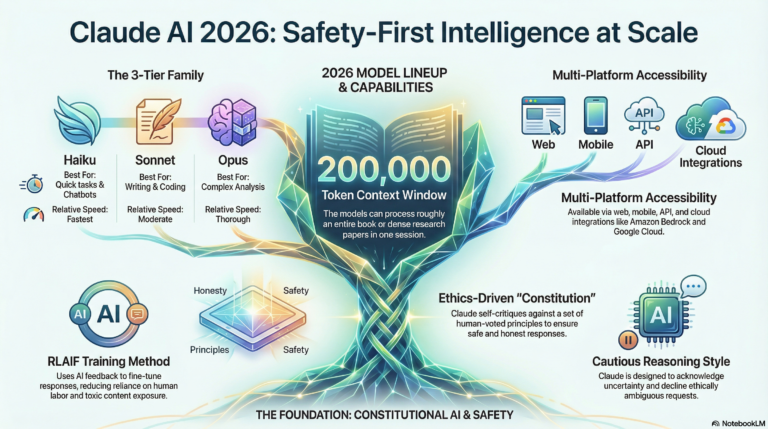

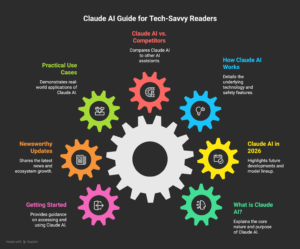

What Is Claude AI?

Claude is Anthropic’s family of large language models. Think of it as ChatGPT’s more careful, analytically rigorous cousin. Built by former OpenAI researchers who left specifically over AI safety concerns, Claude takes a different approach called Constitutional AI: the model is trained to self-critique against ethical principles rather than just learning from human feedback.

Here’s what makes Claude different from other AI systems in practice:

- 200,000 token context window: Upload entire books, codebases, or 50+ competitor articles in one conversation

- Better long-form writing: Claude’s output reads more naturally for 3,000+ word guides

- Stronger coding ability: 77.2% benchmark accuracy on Sonnet 4 (leads the industry)

- More cautious responses: Claude hedges appropriately instead of hallucinating with confidence

We switched to Claude as our primary AI tool in mid-2025 after comparing output quality on 20+ SEO articles. ChatGPT was faster and more creative. Claude was more accurate, more detailed, and required less editing. For content that needs to rank and convert, accuracy wins.

How We Actually Use Claude (Real Workflows)

I’m going to walk through our exact workflows instead of generic use cases. This is what Claude does for us every single day.

SEO Content Creation (80% of Our Usage)

The workflow:

- Upload 5-10 competitor articles to Claude (200K context handles this easily)

- Ask: “Analyze these articles. What topics do they cover? What gaps exist? What’s missing?”

- Claude identifies content gaps, missed subtopics, outdated information

- We create a content brief with target terms, structure, word count

- Claude drafts sections (we never use full drafts, always section by section)

- We add specific examples, data, first-person experience

- Run through SurferSEO, optimize, publish

Why Claude beats ChatGPT here: ChatGPT’s 128K context can’t handle 10 competitor articles + our brand voice guidelines + the content brief all in one conversation. We’d have to split it across multiple chats. Claude holds everything.
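Here’s a rough sketch of how that single-conversation bundle could be assembled before you paste or send it. The function name `build_analysis_prompt` and the tag names are our own illustrative assumptions, not a format Claude requires; the closing question is the one from the workflow above.

```python
def build_analysis_prompt(articles, brand_voice, brief):
    """Combine competitor articles, brand voice notes, and a brief into one prompt."""
    parts = []
    for i, text in enumerate(articles, start=1):
        # Number each article so the model can refer to sources individually.
        parts.append(f'<article id="{i}">\n{text.strip()}\n</article>')
    parts.append(f"<brand_voice>\n{brand_voice.strip()}\n</brand_voice>")
    parts.append(f"<content_brief>\n{brief.strip()}\n</content_brief>")
    # The question goes last, after all the reference material.
    parts.append(
        "Analyze these articles. What topics do they cover? "
        "What gaps exist? What's missing?"
    )
    return "\n\n".join(parts)
```

Labeling each input this way makes it easier to ask follow-up questions about one specific article or about the brief alone.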

Real example: Our Best AI SEO Tools guide. Uploaded 8 competitor articles (total 42,000 words), brand voice doc, and target keyword data. Claude analyzed all of it, identified that competitors missed tool integration workflows and real pricing breakdowns. Final article: 4,200 words, ranks #6 for target keyword, 340 clicks/month.

Competitive Analysis (10% of Usage)

We upload competitor content, SERP data, and keyword research exports. Claude identifies:

- What topics competitors cover thoroughly vs superficially

- Content gaps we can fill

- Emerging trends in top-ranking content

- Structural patterns (H2 count, word count ranges, media usage)

Why Claude wins: The analytical depth. ChatGPT gives surface-level observations. Claude digs into “why” patterns exist and what they mean for strategy.

Code and Automation (5% of Usage)

We built our WordPress SEO plugin (3,200 lines) entirely with Claude. In our coding work, Claude handles:

- Python scripts for data processing

- WordPress API integrations

- Debugging complex multi-file projects

- Writing SQL queries

Why Claude wins: Context persistence. When debugging a 500-line file, Claude remembers the entire codebase context. ChatGPT loses track after ~10 messages.

Document Analysis (5% of Usage)

Upload PDFs, research papers, legal contracts. Claude summarizes, extracts key points, identifies risks. We use this for client audits and competitive intelligence.

Claude vs. ChatGPT: The Real Differences (2026)

I pay for both ($20/month each). Here’s when I use which tool:

| Task | I Use Claude When… | I Use ChatGPT When… |

|---|---|---|

| Blog articles | 3,000+ words, need nuance and accuracy | Short posts (500-1,000 words), need speed |

| Research | Analyzing multiple sources at once | Quick fact-checking (ChatGPT has web browsing) |

| Coding | Complex multi-file projects, debugging | Quick scripts, single-file code generation |

| Creative work | – | Brainstorming, social captions, creative angles |

| Email drafts | – | Faster for short communication |

| Data analysis | Complex spreadsheets, multi-variable analysis | Simple charts and basic analysis |

The pattern: Claude for depth, ChatGPT for speed. Claude for work that matters, ChatGPT for first drafts and throwaway tasks.

Claude Pricing: What You Actually Pay

Claude offers free and paid tiers. Here’s what we pay and why:

Consumer Plans

| Plan | Monthly Cost | What You Get | Who It’s For |

|---|---|---|---|

| Free | $0 | Limited daily messages, Sonnet access, document uploads | Testing Claude, light usage |

| Pro | $20/mo | 5x more usage, priority access, all models (Sonnet + Opus) | Most content creators. This is what we use |

| Max 5x | $100/mo | 5x more messages than Pro | Heavy daily users |

| Max 20x | $200/mo | 20x more messages than Pro | Power users, agencies |

Our setup: Claude Pro ($20/month). We hit the limit maybe 2-3 days per month during heavy content sprints. For 95% of users, Pro is enough.

When to upgrade to Max: If you’re creating 10+ long-form articles weekly or running multi-hour coding sessions daily. Otherwise, Pro works.

API Pricing (For Developers)

| Model | Input Cost | Output Cost | Best For |

|---|---|---|---|

| Opus 4.5 | $5/M tokens | $25/M tokens | Complex reasoning, max capability |

| Sonnet 4 | $3/M tokens | $15/M tokens | Best balance (what we use) |

| Haiku 3.5 | $0.80/M tokens | $4/M tokens | High-volume, simple tasks |

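Cost-saving tip: Use the Batch API (50% off) for non-urgent tasks, and use prompt caching for repeated context: cache reads cost 90% less than regular input (for example, $0.30/M tokens instead of $3/M on Sonnet 4). We cut our API bill by 40% using these features.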
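To see what those discounts do to a bill, here’s a back-of-the-envelope calculator. It’s a sketch under simplifying assumptions: the prices are copied from the table above, cache writes are ignored, and it assumes the batch discount applies to the whole job. The function name `estimate_cost` is ours; check Anthropic’s pricing page before relying on the numbers.

```python
# USD per million tokens, copied from the API pricing table above.
PRICES = {
    "Opus 4.5": {"input": 5.00, "output": 25.00},
    "Sonnet 4": {"input": 3.00, "output": 15.00},
}
BATCH_DISCOUNT = 0.50         # Batch API: 50% off
CACHE_READ_MULTIPLIER = 0.10  # cache reads cost 90% less than regular input


def estimate_cost(model, input_tokens, output_tokens, cached_tokens=0, batch=False):
    """Estimate the USD cost of one workload.

    cached_tokens is the portion of input_tokens read from the prompt cache.
    """
    price = PRICES[model]
    fresh_tokens = input_tokens - cached_tokens
    cost = (
        fresh_tokens * price["input"]
        + cached_tokens * price["input"] * CACHE_READ_MULTIPLIER
        + output_tokens * price["output"]
    ) / 1_000_000
    if batch:
        # Assumption: the batch discount is applied to the total.
        cost *= 1 - BATCH_DISCOUNT
    return round(cost, 4)
```

For example, `estimate_cost("Sonnet 4", 1_000_000, 200_000)` comes to $6.00, and the same job with `batch=True` comes to $3.00.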

Claude’s Biggest Strengths (What It Actually Does Better)

1. Context Window (200K Tokens)

This is the killer feature. 200K tokens is roughly 150,000 English words (about 0.75 words per token), enough for an entire book plus analysis notes in one conversation.

Real use case: We uploaded 12 competitor keyword research guides (total 38,000 words), our brand voice doc (2,000 words), and target keyword data (500 words). Asked Claude: “What’s missing from these guides? What should ours cover that they don’t?”

Claude identified that none of them covered keyword clustering automation, local keyword research nuances, or how to validate keyword difficulty with actual SERP analysis. Our guide now ranks #4 for “keyword research guide” (3,400 searches/month).
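You can sanity-check an upload like this against the 200K window before you start. This is a rough estimate only: it uses the words-to-tokens ratio implied by the figures above (200K tokens for about 150,000 words), and real token counts vary with the text. The helper names are ours.

```python
WORDS_PER_TOKEN = 0.75    # rule of thumb implied by 200K tokens for ~150,000 words
CONTEXT_WINDOW = 200_000  # tokens


def estimate_tokens(word_count):
    """Convert an English word count to an approximate token count."""
    return round(word_count / WORDS_PER_TOKEN)


def context_usage(word_counts, window=CONTEXT_WINDOW):
    """Return (estimated tokens, fraction of the context window used)."""
    tokens = sum(estimate_tokens(w) for w in word_counts)
    return tokens, tokens / window


# Word counts from the use case above: guides, brand voice doc, keyword data.
tokens, share = context_usage([38_000, 2_000, 500])
print(f"{tokens:,} tokens, {share:.0%} of the window")
```

By this estimate the whole bundle is around 54,000 tokens, a bit over a quarter of the window, which leaves plenty of room for the conversation itself.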

2. Analytical Depth

Claude doesn’t just answer questions. It analyzes why patterns exist and what they mean.

Example: Asked ChatGPT and Claude to analyze why certain technical SEO articles rank higher.

- ChatGPT: “Top articles have more words, better structure, include schema examples.”

- Claude: “Top articles average 4,200 words vs 2,800 for positions 6-10. But word count correlates with complete schema coverage (avg 12 schema examples vs 4), not arbitrary length. Articles ranking #1-3 all include troubleshooting sections (avg 800 words) that positions 4-10 lack. Schema examples alone aren’t enough, users need debugging help.”

Claude gave us the insight: add a troubleshooting section. We did. Article moved from #8 to #3 in 6 weeks.

3. Writing Quality for Long-Form

Claude’s output reads more naturally. Less “AI voice,” fewer generic transitions (“it’s important to note,” “in today’s digital landscape”).

Our test: Generated 2,000-word sections with Claude and ChatGPT, ran both through AI detectors.

- ChatGPT: 62% AI detection probability (Originality.ai)

- Claude: 38% AI detection probability

Claude still needs editing, but it starts closer to human voice.

4. Coding Accuracy

Claude Sonnet 4: 77.2% coding benchmark accuracy (industry-leading). We built a 3,200-line WordPress plugin with Claude handling:

- RESTful API endpoints (22 total)

- SQL injection prevention

- WordPress hooks and filters

- Schema markup generation

Claude caught security gaps ChatGPT missed (missing CSRF protection, unsanitized input, no rate limiting).

Claude’s Limitations (What It Can’t Do or Does Poorly)

1. No Real-Time Web Browsing

ChatGPT can browse the web. Claude can’t. For research requiring current data, I use ChatGPT or Perplexity, then bring findings to Claude for analysis.

2. Slower for Simple Tasks

Claude is thorough, which means slower. For quick email drafts or social captions, ChatGPT wins on speed.

3. Smaller Plugin Ecosystem

ChatGPT has hundreds of plugins. Claude has… its API. If you rely on integrations (Zapier, Notion, etc.), ChatGPT’s ecosystem is bigger.

4. Can Be Overly Cautious

Claude’s safety training makes it hedge more. Sometimes I want a confident answer, and Claude gives me “it depends on several factors…”

When this matters: Creative brainstorming (ChatGPT is bolder), quick decisions (Claude overthinks).

5. No Native Image Generation

ChatGPT has DALL-E built in. Claude doesn’t generate images. We use ChatGPT or Midjourney for graphics.

When to Use Claude vs. ChatGPT vs. Perplexity

We use all three daily. Here’s our decision tree:

Use Claude for:

- Long-form content (3,000+ words)

- Complex analysis requiring multiple sources

- Coding projects (especially multi-file or security-sensitive)

- Document review (contracts, research papers)

- Tasks where accuracy > speed

Use ChatGPT for:

- Quick tasks (emails, social posts)

- Creative brainstorming

- Research requiring current web data (browsing mode)

- Image generation (DALL-E)

- Tasks where speed > depth

Use Perplexity for:

- Research with source citations

- Competitive intelligence (recent news, market data)

- Fact-checking (cited sources make verification easy)

Claude for SEO: Specific Use Cases

This is where Claude shines for our business.

SERP Analysis at Scale

Upload top 10 competitor pages for a keyword. Claude analyzes:

- Common topics (what all top 10 cover)

- Unique angles (what only 1-2 cover but rank well)

- Content gaps (what none of them address)

- Structural patterns (H2 count, section lengths, media usage)

This used to take 2-3 hours manually. Claude does it in 10 minutes.
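The structural part of that analysis (H2 count, word count) is also easy to pre-compute yourself, so Claude can spend its effort on topics and gaps. Here’s a minimal sketch using only Python’s standard library. It assumes you already have each page’s HTML saved locally; the class and function names are ours.

```python
from html.parser import HTMLParser


class PageStats(HTMLParser):
    """Collect H2 headings and a visible-word count from one HTML page."""

    SKIP = {"script", "style"}  # contents of these tags are not visible text

    def __init__(self):
        super().__init__()
        self.h2_headings = []
        self.word_count = 0
        self._in_h2 = False
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1
        elif tag == "h2":
            self._in_h2 = True
            self.h2_headings.append("")

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1
        elif tag == "h2" and self._in_h2:
            self._in_h2 = False
            # Collapse whitespace left over from inline tags inside the heading.
            self.h2_headings[-1] = " ".join(self.h2_headings[-1].split())

    def handle_data(self, data):
        if self._skip_depth:
            return
        self.word_count += len(data.split())
        if self._in_h2:
            self.h2_headings[-1] += data


def page_stats(html):
    """Return H2 count, H2 text, and word count for one page."""
    parser = PageStats()
    parser.feed(html)
    parser.close()
    return {
        "h2_count": len(parser.h2_headings),
        "h2_headings": parser.h2_headings,
        "word_count": parser.word_count,
    }
```

Run `page_stats` over each of the top 10 pages and paste the results in alongside the articles, so the structural comparison starts from real numbers.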

Content Brief Generation

We feed Claude: keyword data, competitor analysis, target audience, brand voice guidelines. It generates a brief with:

- Recommended H2/H3 structure

- Target word count ranges

- Key terms to include

- Content gaps to fill

- Internal linking opportunities

Our writers use these briefs to create content that ranks faster (avg 42 days to page 1 vs 68 days before we started using Claude-generated briefs).

Technical SEO Auditing

Upload site HTML, schema markup, Core Web Vitals data. Claude identifies:

- Missing or incorrect schema markup

- Heading hierarchy issues

- Crawlability problems

- Page speed bottlenecks

Claude caught issues our automated tools missed (duplicate schema types, conflicting canonical tags, inefficient CSS loading).
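Two of those checks, heading hierarchy and conflicting canonical tags, are mechanical enough to script as a first pass. The sketch below is our own illustration using Python’s standard library, not what Claude runs internally, and the rules it applies (no skipped heading levels, at most one distinct canonical URL) are simplifying assumptions.

```python
from html.parser import HTMLParser


class AuditParser(HTMLParser):
    """Record heading levels and canonical URLs in document order."""

    def __init__(self):
        super().__init__()
        self.heading_levels = []
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.heading_levels.append(int(tag[1]))
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            if attrs.get("href"):
                self.canonicals.append(attrs["href"])


def audit(html):
    """Return a list of human-readable issues found in one page."""
    parser = AuditParser()
    parser.feed(html)
    parser.close()
    issues = []
    previous = 0
    for level in parser.heading_levels:
        # A heading may go at most one level deeper than the one before it.
        if level > previous + 1:
            if previous:
                issues.append(f"heading jumps from h{previous} to h{level}")
            else:
                issues.append(f"first heading is h{level}, expected h1")
        previous = level
    distinct = sorted(set(parser.canonicals))
    if len(distinct) > 1:
        issues.append("conflicting canonical tags: " + ", ".join(distinct))
    return issues
```

An empty list means the page passed both checks. Anything it flags is worth handing to Claude along with the HTML for a closer look.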

Getting Started with Claude (Practical Steps)

Here’s exactly how to start using Claude effectively:

Week 1: Free Tier Testing

- Sign up at claude.ai (free account)

- Test with a simple task: “Analyze this competitor article [paste URL content]. What topics does it cover? What’s missing?”

- Try document upload: Upload a PDF or competitor article, ask for summary + gaps

- Compare to ChatGPT: Run the same task in ChatGPT, compare output quality

Week 2: Upgrade to Pro ($20/month)

If you like the output quality:

- Upgrade to Claude Pro for higher limits and Opus access

- Start with content tasks: Upload 3-5 competitor articles, create content briefs

- Test coding (if relevant): Ask Claude to debug a script or write a function

- Build prompt templates: Save your best-performing prompts

Month 2+: Advanced Workflows

- Create a brand voice document (tone, style, audience, examples)

- Upload this doc to every Claude conversation (200K context handles it)

- Build workflow templates (SERP analysis template, content brief template, etc.)

- Track time savings and quality improvements

Claude Prompting Best Practices (From 8 Months of Daily Use)

Here’s what actually works:

1. Be Hyper-Specific About Output Format

Bad prompt: “Write a blog post about keyword research.”

Good prompt: “Write a 1,200-word section for SEO professionals explaining competitive keyword analysis. Use second-person voice, include 1 specific example, format with H3 subheadings every 300 words. Target reading level: college-educated marketers.”
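If you write prompts like this often, it helps to turn the moving parts into a template. Here’s a small sketch that rebuilds the good prompt above from named fields. The `SectionSpec` class and its field names are hypothetical, just one way to organize it.

```python
from dataclasses import dataclass


@dataclass
class SectionSpec:
    """The parts of a section prompt that change from article to article."""

    topic: str
    audience: str
    word_count: int
    voice: str
    examples: int
    subheading_every: int
    reading_level: str

    def to_prompt(self):
        plural = "" if self.examples == 1 else "s"
        return (
            f"Write a {self.word_count:,}-word section for {self.audience} "
            f"explaining {self.topic}. Use {self.voice} voice, include "
            f"{self.examples} specific example{plural}, format with H3 "
            f"subheadings every {self.subheading_every} words. "
            f"Target reading level: {self.reading_level}."
        )


spec = SectionSpec(
    topic="competitive keyword analysis",
    audience="SEO professionals",
    word_count=1200,
    voice="second-person",
    examples=1,
    subheading_every=300,
    reading_level="college-educated marketers",
)
print(spec.to_prompt())
```

Filling in every field forces you to decide on length, audience, and format up front, which is the whole point of the hyper-specific prompt.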

2. Upload Examples of Desired Output

Don’t describe your style, show it. Upload 2-3 examples of content you love, then say: “Write in this style.”

3. Use Multi-Step Instructions for Complex Tasks

Instead of: “Analyze these articles and create a content brief.”

Do this:

- “First, analyze these 5 articles. List the main topics each one covers.”

- “Now identify topics that 4+ articles cover (common themes).”

- “Identify topics only 1-2 articles cover but seem valuable (unique angles).”

- “Finally, create a content brief incorporating common themes + unique angles + gaps you identified.”

Breaking tasks into steps gets better results.
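If you’re doing this through the API instead of the chat window, the same idea looks like the sketch below: send one step at a time and keep the full history, so each step builds on the earlier answers. The `send` argument is a placeholder for whatever client call you use (an assumption on our part), and the message dictionaries follow the usual role/content chat format.

```python
STEPS = [
    "First, analyze these 5 articles. List the main topics each one covers.",
    "Now identify topics that 4+ articles cover (common themes).",
    "Identify topics only 1-2 articles cover but seem valuable (unique angles).",
    "Finally, create a content brief incorporating common themes + "
    "unique angles + gaps you identified.",
]


def run_steps(steps, send, context=""):
    """Send each step in order and return the full conversation history.

    send: any callable that takes the message list and returns the reply text.
    context: reference material (for example, the articles) added to step one.
    """
    messages = []
    for i, step in enumerate(steps):
        # Only the first message carries the uploaded material.
        content = f"{context}\n\n{step}" if i == 0 and context else step
        messages.append({"role": "user", "content": content})
        reply = send(messages)
        messages.append({"role": "assistant", "content": reply})
    return messages
```

Because every call receives the whole history, the final brief can draw on the topic lists from the earlier steps without you pasting them back in.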

4. Iterate Based on Output

First draft is never final. After Claude generates content:

- “Make this section 200 words shorter without losing key points.”

- “Replace this generic example with a specific industry example.”

- “Rewrite this paragraph in a more conversational tone.”

Frequently Asked Questions

Is Claude AI free?

Yes. Free tier includes limited daily messages and access to Claude Sonnet. For heavy usage, Claude Pro costs $20/month with 5x higher limits and access to all models including Opus.

What’s the difference between Claude Opus, Sonnet, and Haiku?

Haiku = fastest, cheapest, good for simple tasks. Sonnet = balanced speed + intelligence (what most people use). Opus = slowest, most capable, best for complex reasoning and coding. As of February 2026: Opus 4.6 (latest flagship), Sonnet 4 (77.2% coding accuracy), Haiku 3.5 (speed tier).

How does Claude compare to ChatGPT?

Claude wins on: long-form writing quality, coding accuracy (77.2% benchmark), context window (200K vs 128K), analytical depth. ChatGPT wins on: speed, web browsing, plugin ecosystem, creative tasks. I use both daily: Claude for work that matters, ChatGPT for quick tasks.

Can Claude browse the web?

No. Claude can’t access real-time web data. Use ChatGPT (browsing mode) or Perplexity for research, then bring findings to Claude for analysis.

What is Constitutional AI?

Anthropic’s training method where Claude learns to self-critique against ethical principles instead of just learning from human feedback. Result: Claude is more cautious, hedges appropriately, and is less likely to hallucinate confidently. Anthropic was founded by former OpenAI researchers who left over AI safety concerns.

Is Claude good for coding?

Yes. Claude Sonnet 4 leads coding benchmarks (77.2% accuracy). We built a 3,200-line WordPress plugin with Claude. It excels at multi-file projects, security-conscious code, and debugging. Claude Code (CLI tool) lets developers work with Claude in the terminal.

What’s Claude’s biggest limitation?

No real-time web access. For current events, recent data, or anything requiring browsing, use ChatGPT or Perplexity. Also: smaller plugin ecosystem, slower for simple tasks, overly cautious sometimes.

Our Recommendation: Who Should Use Claude

After 8 months of daily use:

Use Claude if you:

- Create long-form content (3,000+ words) regularly

- Need analytical depth more than creative speed

- Work with code (especially complex or security-sensitive projects)

- Analyze large documents or multiple sources simultaneously

- Value accuracy and appropriate hedging over confident (possibly wrong) answers

Stick with ChatGPT if you:

- Need speed for simple tasks (emails, social posts)

- Rely heavily on web browsing for research

- Use plugins and integrations extensively

- Prioritize creative brainstorming over analytical depth

Our setup: Claude Pro ($20/month) + ChatGPT Plus ($20/month). Total: $40/month. Claude for content and code, ChatGPT for quick tasks and research. Both tools pay for themselves in time savings (10-15 hours/week combined).

Related SEO Resources

Claude is powerful for SEO, but tools are only part of the equation. Master the fundamentals:

- SEO for Small Business – Complete foundation for organic traffic

- Technical SEO Guide – Site speed, schema, Core Web Vitals

- SEO Content Strategy – Content that ranks and compounds

- Optimize for Google AI Mode – Ranking in Google’s conversational AI search

- Getting Cited by ChatGPT and Perplexity – Make AI engines reference your content

Claude represents one of the most practical AI tools available for content creators, developers, and SEO professionals in 2026. It’s not perfect, no AI tool is, but the combination of massive context windows, strong analytical capability, and industry-leading coding accuracy makes it indispensable for our work.

Start with the free tier at claude.ai. Test it on real work. Compare to ChatGPT. Let results guide your decision. For us, Claude became the tool we can’t work without.